Navigating the NIST AI RMF v1.0

This post is Part 2 of a two-part series on NIST’s AI Risk Management Framework. Be sure to check out Part 1 for an introduction to the AI RMF and a look at the Govern and Map functions.

Picking Up from Part 1: From Mapping Risks to Measuring and Managing

In Part 1, we introduced the NIST AI Risk Management Framework (AI RMF 1.0) and covered its first two core functions: Govern (establishing AI risk governance and culture) and Map (identifying and contextualizing AI risks). Now, in Part 2, we’ll dive into the remaining two functions – Measure and Manage – and discuss how to put them into practice. We’ll also look at practical strategies and tools you can use, honestly assess some limitations of the framework, and provide a simple checklist to help you apply these concepts to your own AI initiatives.

The Measure Function: Assessing and Quantifying AI Risks

The Measure function is all about analyzing and quantifying the risks identified in the Map stage[1]. In practical terms, this means developing ways to evaluate your AI system’s performance and behavior against your risk criteria, so you have evidence and metrics to inform decisions. Under Measure, an organization sets up processes to track and monitor an AI system’s trustworthiness characteristics over time – things like fairness, accuracy, robustness, security, and privacy.

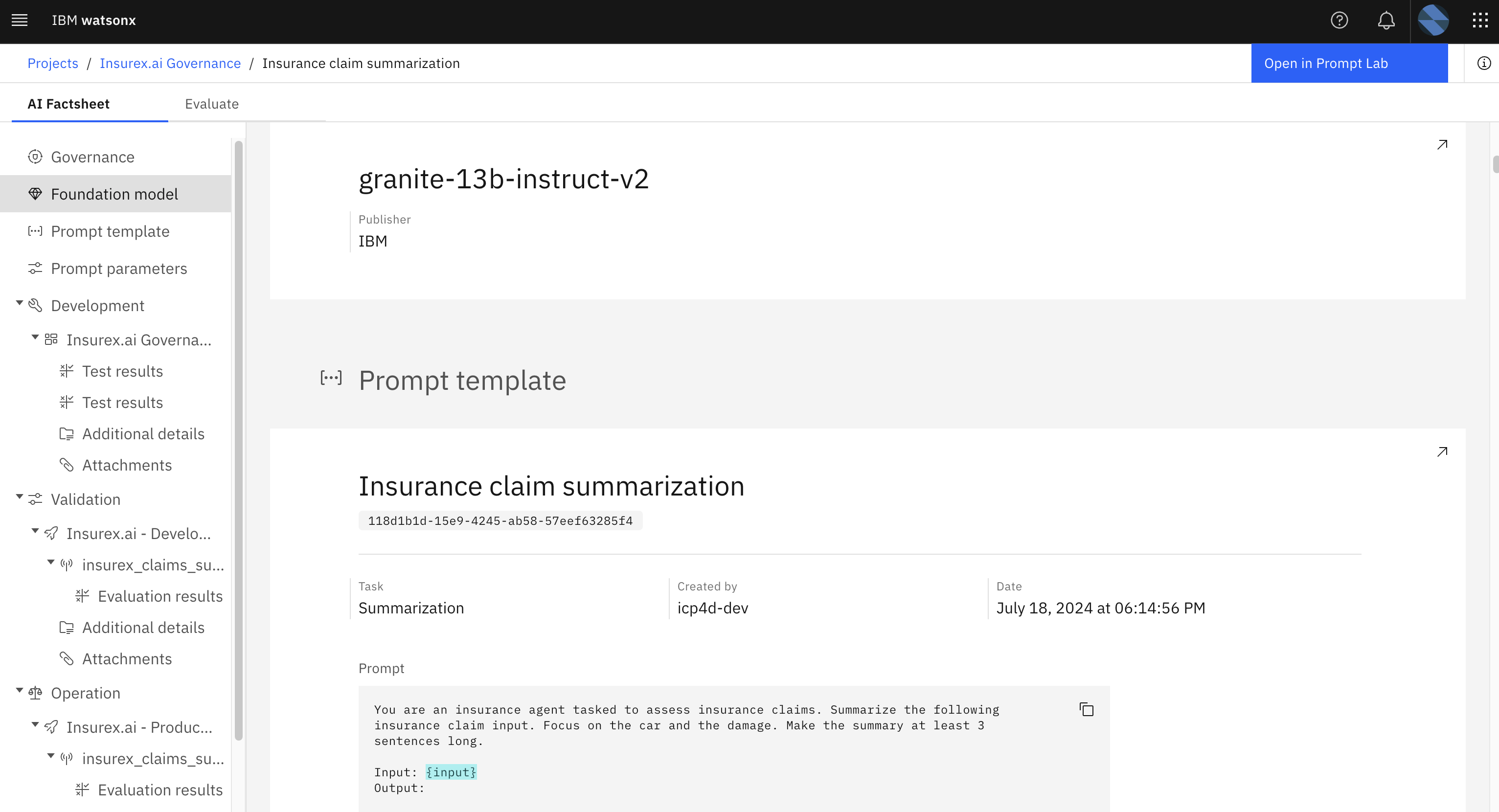

Example AI risk metrics dashboard (IBM’s watsonx.governance platform). This dashboard displays model compliance status, metric breach rates, provider breakdown, and use-case risk summaries for deployed AI models. Such visualizations help monitor accuracy, fairness, and operational risks of AI systems over time.

What does “measuring AI risk” involve? At a high level, it involves defining metrics, tests, or evaluation techniques that correspond to your risks[2]. For example, if bias is a risk, you might measure and monitor bias indicators in your model’s outputs. If security is a concern, you might conduct adversarial attack tests. Some common measurement activities include:

- Performance Metrics: Evaluate model accuracy, error rates, precision/recall, etc., to understand how reliably the AI performs[2]. Set thresholds for acceptable performance.

- Fairness & Bias Audits: Use fairness indicators or bias tests to detect unwanted bias in predictions (e.g. checking outcomes across different demographic groups)[2]. Measure and document any disparities.

- Robustness & Security Testing: Conduct stress tests or adversarial testing to see how the AI system holds up against attacks or noisy inputs[2]. Identify vulnerabilities like susceptibility to manipulation or data poisoning.

- Explainability & Transparency Checks: Evaluate how interpretable the model’s decisions are (if explainability is a goal), perhaps by using explainability toolkits to generate explanations and seeing if they make sense to humans.
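To make a couple of these activities concrete, here is a minimal sketch in plain Python of a performance check and a simple group-fairness check. The function names, the toy data, and the choice of demographic parity difference as the bias indicator are illustrative assumptions, not something the AI RMF prescribes. In practice you would likely pick metrics and tooling suited to your own use case.

```python
from collections import defaultdict


def performance_metrics(y_true, y_pred):
    """Accuracy, precision, and recall for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


def selection_rates(y_pred, groups):
    """Share of positive predictions within each demographic group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(y_pred, groups):
    """Gap between the highest and lowest group selection rate (0 = parity)."""
    rates = selection_rates(y_pred, groups)
    return max(rates.values()) - min(rates.values())


# Toy data, purely illustrative.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

perf = performance_metrics(y_true, y_pred)
gap = demographic_parity_difference(y_pred, groups)
print(perf, gap)
```

A single number like this gap is only a proxy. Which fairness definition fits depends on the context you established in Map, so treat the output as a prompt for review rather than a verdict.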

The Measure function isn’t just one-and-done. It requires continuous monitoring. You’ll want to establish ongoing tracking of these metrics (accuracy, bias, etc.) and define what “unacceptable” looks like[5][6]. For instance, you might set a rule that “if the model’s accuracy drops below X% or if bias metric exceeds Y, trigger an alert or review.” This way, Measure gives you an early warning system for emerging issues. It turns nebulous risks into concrete observations that can be documented and communicated.
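A rule like that can be written down as data and checked automatically. The sketch below is one possible shape for it: the `Threshold` class, the `find_breaches` helper, and the limits of 0.90 and 0.10 are hypothetical stand-ins for your own X and Y, not values taken from the framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str
    limit: float
    direction: str  # "min": value must stay at or above limit; "max": at or below


def find_breaches(metrics, thresholds):
    """Return a human-readable message for every threshold that is violated."""
    breaches = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is None:
            # A missing measurement is itself worth a review.
            breaches.append(f"{t.metric}: no measurement available")
        elif t.direction == "min" and value < t.limit:
            breaches.append(f"{t.metric}: {value} is below minimum {t.limit}")
        elif t.direction == "max" and value > t.limit:
            breaches.append(f"{t.metric}: {value} is above maximum {t.limit}")
    return breaches


# Hypothetical limits; choose your own based on your risk tolerance.
thresholds = [
    Threshold("accuracy", 0.90, "min"),
    Threshold("bias_gap", 0.10, "max"),
]

latest = {"accuracy": 0.87, "bias_gap": 0.04}
alerts = find_breaches(latest, thresholds)
for message in alerts:
    print("REVIEW NEEDED:", message)
```

Keeping the thresholds in one reviewable place, rather than scattered through monitoring scripts, also makes it easier to document what "unacceptable" means and who agreed to it.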

Crucially, Measure provides the evidence base for managing AI risks. By quantifying how likely and severe certain risks are (or tracking proxies for them), you arm your team – and leadership – with data to make informed decisions[1]. Measure can involve both quantitative metrics and qualitative assessments (for aspects that are hard to quantify, like “societal impact”). The outcome of this function is a risk assessment knowledge base: you’ve measured where things stand, so you know which risks are minor and which ones could be show-stoppers.

The Manage Function: Mitigating and Monitoring AI Risks

If Map identified the risks and Measure assessed them, the Manage function is where you take action on those insights. Manage is all about prioritizing and treating AI risks so that they stay within acceptable levels[1]. In other words, this is the risk response phase: deciding what to do about the risks you’ve identified and measured.

Key activities in Manage include:

- Prioritize Risks: Not all risks are equal. Under Manage, you rank which risks need attention first (often those with the highest potential harm or likelihood). For example, if an AI system in healthcare has a small accuracy issue and a severe bias issue, you might fix the bias first because the harm from unfair outcomes is likely to be more severe.

- Mitigation Strategies: For each significant risk, implement controls or safeguards to reduce it[1]. Mitigations can be technical fixes (e.g., retraining a model with more balanced data to reduce bias, adding calibrated noise to data or outputs to protect privacy, strengthening access controls around the model) or process changes (e.g., having humans double-check AI decisions, setting up approval workflows for certain AI actions, instituting an AI ethics review board). The NIST framework suggests that sometimes the best mitigation may be not deploying an AI system at all if the risk is too high to mitigate effectively[1].

- Incident Response Planning: Despite our best efforts, things will go wrong. Manage includes preparing for AI-related incidents[1]. That means having a plan for when the AI system does something unexpected or harmful – who gets alerted, how to shut it down or roll it back, how to communicate the issue, etc. For example, if your chatbot starts giving dangerous advice due to a flaw, an incident plan might dictate pulling it offline immediately and issuing an apology.

- Ongoing Monitoring and Adjustment: Manage is not “one and done.” Just as AI systems evolve, your risk mitigations need to evolve. This function emphasizes continuous monitoring of the system post-deployment and updating risk treatments as needed[5]. If your model’s behavior drifts or new risks emerge (say, new regulations or new attack techniques), you loop back and adjust your controls. In practice, this might mean regular model check-ins, periodic audits, and a living AI risk register that’s reviewed by stakeholders on an ongoing basis.
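As a rough illustration of the prioritize-then-treat steps above, here is a small Python sketch of a risk register that ranks risks and suggests a treatment. The 1-to-5 scales, the severity-times-likelihood score, the `appetite` cutoff, and all names are assumptions made for this example. The AI RMF does not prescribe a scoring formula, and multiplying ordinal ratings is a common convention rather than a rigorous measure.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    severity: int      # 1 (minor) to 5 (critical), hypothetical scale
    likelihood: int    # 1 (rare) to 5 (almost certain), hypothetical scale
    mitigable: bool = True

    @property
    def score(self) -> int:
        return self.severity * self.likelihood


def prioritize(risks):
    """Highest-scoring risks first."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


def treatment(risk, appetite=6):
    """Suggest a response; 'appetite' is the score you are willing to accept."""
    if risk.score <= appetite:
        return "accept and monitor"
    if not risk.mitigable:
        return "avoid (do not deploy)"
    return "mitigate"


# Illustrative entries loosely based on the healthcare example above.
register = [
    Risk("small accuracy issue", severity=2, likelihood=3),
    Risk("severe bias issue", severity=5, likelihood=3),
    Risk("unsafe autonomous action", severity=5, likelihood=2, mitigable=False),
]

ranked = prioritize(register)
for risk in ranked:
    print(f"{risk.score:>2}  {risk.name}: {treatment(risk)}")
```

The "avoid" branch reflects the point made earlier that sometimes the right response is not to deploy. How you decide a risk is not mitigable is a judgment call that the code cannot make for you.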

Think of Manage as the “action and control” phase of the AI RMF. After using Measure to determine which fires are burning hottest, Manage is about grabbing the extinguisher and putting fire breaks in place for the future. This could range from technical interventions (retraining a model, applying bias mitigation algorithms) to organizational policies (like changing how users interact with the AI, or mandating additional approvals for high-risk uses). It’s an iterative process: manage what you know now, monitor the AI system and environment for changes, then adjust. NIST explicitly notes that risk management for AI should be a continuous loop, not a checklist you complete once[1].

Putting It Into Practice: Implementing the AI RMF (Tools & Techniques)

Knowing the theory behind Map-Measure-Manage is one thing – implementing it in a real organization is another. The AI RMF is voluntary and flexible, which means there’s no one-size-fits-all approach. Here we’ll outline some practical steps and tips to operationalize AI risk management using the framework. Consider this a rough roadmap for applying Govern→Map→Measure→Manage in your context (adapted from NIST’s guidance and industry best practices):

- Learn and Prepare: Start by educating your team about the AI RMF and why it matters. Run internal workshops or training sessions on the framework’s core concepts and terminology[2]. Leadership buy-in is crucial – make sure executives understand the value of managing AI risks (you might frame it as preventing future scandals, compliance issues, or safety incidents). Getting that “tone from the top” will help establish a risk-aware culture from the beginning. Also, gauge your organization’s current maturity: do you already have some AI governance practices, or is this brand new? This will influence how you roll out the next steps.

- Identify Your AI Systems: You can’t manage what you don’t know exists. Take an inventory of all AI/ML systems in your organization (including those in development)[3]. For each system, capture basic info: What is its purpose? What data does it use? Where is it deployed? Who owns it? This is essentially the start of the Map function – establishing context. Involve people who know each system well (developers, product managers, etc.) to get a full picture. The result should be a list of AI systems with descriptions that you can then assess for risk.

- Map the Risks: For each AI system identified, perform a risk assessment (brainstorm or analysis) to map out potential failure modes, impacts, and stakeholders[4]. Ask the tough questions: “What’s the worst that could happen if this system fails or is misused?” “Could it harm someone or violate laws?” “Who would be affected and how?” Consider technical risks (e.g. model errors, bias, security vulnerabilities) as well as societal/ethical risks (e.g. unfair outcomes, privacy invasion). It’s often helpful to bring diverse perspectives into this process – different team members or even external stakeholders can surface risks you might miss. Document these risks in a consistent format (creating an “AI risk register” or similar).

- Prioritize Risks: Once you have a laundry list of risks, categorize and prioritize them[1]. Not every AI system will warrant the same level of scrutiny. A common approach is to classify systems (or individual risk scenarios) as high, medium, or low risk. This depends on factors like the severity of potential harm and the likelihood of occurrence. For example, an AI that recommends movies might be low risk, whereas an AI used in medical diagnosis or loan approvals is high risk. Focus your resources on the high-risk areas first. NIST’s framework is meant for all AI systems, but it’s realistic to tier your approach – you’ll apply the most rigorous controls to what matters most[1].

- Measure and Evaluate: Now implement the Measure function for the most important risks. Set up the metrics, tests, and monitoring processes as discussed earlier. For each high-priority AI system, you should determine how you will detect issues and gauge performance over time[1][2]. This could involve using existing tools or developing custom evaluation scripts. For instance, there are open-source toolkits for AI fairness (like IBM’s AIF360 or Microsoft Fairlearn) that can help measure bias; security testing frameworks to probe ML models; and various dashboards for monitoring model drift. Define what success and failure look like (e.g., “model must remain above X accuracy and below Y bias” or “no high-severity vulnerabilities in pen-tests”). Importantly, document your measurement approach – what you’re measuring and why – so it’s transparent[6].

- Mitigate Risks: Next, for the biggest issues, take action per the Manage function. This step is about designing and implementing risk controls[1]. If your Map/Measure steps revealed, say, a significant bias in your model, mitigation might involve retraining the model with more diverse data or applying algorithmic bias mitigation techniques. If there’s a risk of the AI making unsafe decisions, a mitigation could be adding a human review step or a rule-based failsafe. Leverage both technical fixes and policy/process changes. NIST’s companion AI RMF Playbook (an interactive resource) can be useful here – it offers suggested actions and references for various outcomes in the framework[1]. For example, if one recommended outcome is to “increase transparency,” the Playbook might point you to specific documentation standards or tools as a way to achieve that[1][2]. Assign owners and deadlines for each mitigation so that it actually gets implemented.

- Test and Validate: Risk management isn’t static. Establish a routine for testing your AI systems and the effectiveness of your mitigations[5]. This could mean scheduling periodic audits – e.g., run a bias audit every quarter, conduct a security penetration test every six months, etc. – whatever makes sense for the pace of your AI deployments. Continuously monitor the metrics you defined in Measure. Set up alerts or review triggers when something drifts out of bounds[5]. The goal is to catch problems early, before they lead to real harm or compliance issues. If testing uncovers new risks or shows that a mitigation isn’t working as intended, feed that insight back into your risk management process (maybe you need to refine the model further, update a policy, or in some cases rethink the entire system).

- Document Everything: Throughout this process, maintain thorough documentation – it’s one of the unsung heroes of risk management. Keep records of your risk assessments, decisions made, metrics collected, and mitigations applied[1]. Good documentation provides accountability and traceability. It also prepares you for the day you might need to demonstrate your AI risk practices to regulators, auditors, or customers. Consider maintaining an AI Risk Register or a “risk log” for each AI system that gets updated over time. NIST’s framework puts a strong emphasis on transparency and record-keeping as a trust mechanism[1].

- Train and Educate Staff: People are at the core of AI risk management. Make sure to train your teams on their roles and responsibilities in this framework[25]. Developers might need training on bias mitigation techniques or secure coding for ML; project managers might need to know when to escalate an AI risk to higher management; legal or compliance staff might need training on AI ethics guidelines. Build AI risk awareness into onboarding and ongoing learning. The more your workforce understands the why and how of AI risk controls, the more naturally these practices will be adopted.

- Engage Stakeholders: AI risk can’t be managed in a silo. Proactively engage a wide range of stakeholders in your AI projects[26]. Internally, this means collaboration between data scientists, engineers, security teams, legal, compliance, HR, etc., depending on the use case. Externally, consider reaching out to end-users, domain experts, or impacted communities for feedback, especially for high-stakes AI applications. For example, if deploying an AI in a healthcare setting, engage clinicians and patients in evaluating its impact. This not only helps catch issues that insiders might overlook, but also builds trust – people see that you’re being transparent and inclusive in how you manage AI.

- Monitor and Adapt: Finally, treat the AI RMF process as continuous and adaptive. Set up a cadence (perhaps an AI risk committee that meets periodically) to review the overall risk landscape and update your approach as needed[27]. As AI tech evolves and your use of AI grows, you might face new types of risks that weren’t on your radar initially (e.g., new regulations, or new kinds of AI like generative models bringing new concerns). Be ready to iterate. The framework itself is meant to be a living document – NIST has said they will update it over time – so your implementation should be living too. Don’t hesitate to tweak your processes, add new metrics, or improve controls as lessons are learned.
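Several of the steps above (inventory, tiering, periodic testing) can be tied together with very little tooling. The following Python sketch shows one way to record an AI system inventory and flag systems whose review is overdue. The field names, the tier labels, and the review intervals are hypothetical choices for illustration, so adjust them to the pace of your own deployments.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical review cadence per risk tier, in days.
REVIEW_INTERVAL_DAYS = {"high": 90, "medium": 180, "low": 365}


@dataclass
class AISystem:
    name: str
    purpose: str
    owner: str
    tier: str          # "high", "medium", or "low"
    last_review: date


def next_review(system):
    """Date by which the system should be reviewed again."""
    return system.last_review + timedelta(days=REVIEW_INTERVAL_DAYS[system.tier])


def overdue(systems, today):
    """Names of systems whose next review date has already passed."""
    return [s.name for s in systems if next_review(s) < today]


# Illustrative inventory entries.
inventory = [
    AISystem("loan-approval-model", "credit decisions", "risk team",
             "high", date(2025, 1, 15)),
    AISystem("movie-recommender", "content suggestions", "product team",
             "low", date(2024, 9, 1)),
]

late = overdue(inventory, today=date(2025, 6, 1))
print("Overdue for review:", late)
```

Even a spreadsheet can play this role. The point is that each system has an owner, a tier, and a date after which someone has to look at it again.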

Limitations and Criticisms of NIST AI RMF 1.0

No framework is perfect, and the AI RMF v1.0 has its share of limitations and challenges in practice. It’s important to be aware of these so you can address them or set proper expectations:

- Voluntary Framework (No Teeth): The AI RMF is entirely voluntary – there’s no certification or enforcement mechanism built in. While this flexibility encourages adoption, it also means organizations can claim they follow it without real action. Critics worry about “ethics washing,” where companies might tout adherence but not actually change anything. In fact, some advocacy groups (like EPIC) have urged NIST to include more accountability or independent audits, warning that without external checks, there’s little incentive for compliance beyond goodwill[9].

- Resource Intensity & Complexity: For small or less AI-mature organizations, the framework can feel daunting. AI RMF v1.0 is detailed, and implementing all its suggestions requires significant expertise, time, and effort[6]. If you’re a startup or a small team, you might not have a Chief AI Risk Officer or a dedicated cross-functional team ready to tackle all this. There’s concern that only Big Tech or large enterprises can fully implement the framework’s recommendations. NIST does emphasize that you should prioritize and not necessarily do everything at once, but the learning curve remains non-trivial. In practice, smaller organizations might struggle to allocate the necessary people and budget to AI risk management initiatives[6].

- Evolving AI, Evolving Framework: AI is a moving target. New risks (and best practices to mitigate them) are continuously emerging. The AI RMF v1.0 is a first version – by nature it may become outdated in parts as technology progresses[7]. For example, the rise of generative AI (like GPT models) brought new issues (hallucinations, prompt injection attacks, etc.) that aren’t deeply covered in the core framework. NIST has responded by releasing a Generative AI Profile (NIST AI 600-1) in July 2024 to supplement the framework for that domain[7]. But generally, users of the framework need to stay abreast of AI advancements and possibly extend the guidance on their own when they hit an area not explicitly covered. The framework tends to be outcome-oriented (“ensure your AI is fair”) without prescribing specific metrics or tools, which can leave some organizations asking “okay, but how exactly?” Over time, as community feedback is gathered and new versions or profiles are released, some of these gaps will likely be filled – but for now, a lot of interpretation is left to the user.

- Lack of External Validation: Currently, there’s no official way to get “NIST AI RMF certified” or have a third party validate that you’re doing a good job with it. Some have suggested that an audit or certification ecosystem could emerge around the framework, similar to what we have for cybersecurity standards, but that’s not in place yet[8]. This ties back to the voluntary point – if you’re a consumer or regulator looking at a company claiming they use the AI RMF, you have to trust their word (unless they voluntarily publish documentation or invite third-party assessments). This limitation might be resolved in the future if industry groups or governments start baking the AI RMF into compliance regimes, but as of v1.0 it’s more of a guiding light than a compliance standard.

Despite these limitations, it’s widely acknowledged that the NIST AI RMF fills an important void in the AI ecosystem[8]. Before it, organizations had a bunch of high-level ethical AI principles and no concrete way to implement them. Now there’s at least a baseline process to follow. The framework’s imperfections (lack of teeth, complexity) are real, but they don’t negate its value as a foundation. It just means that using it effectively might require additional effort – like securing leadership commitment to overcome the voluntary problem, or using community resources (playbooks, case studies) to tackle the complexity problem. NIST is continuing to refine the framework, and we can expect updates and improvements as AI technology and governance approaches mature.

Quick AI RMF Checklist for Practitioners

As a wrap-up, here’s a simple checklist distilling some key practices from the AI RMF that you can apply to your AI projects. Think of these as guiding questions or tasks to ensure you’re covering the bases of AI risk management:

- ☑ Have we established AI governance? (Leadership support, roles and responsibilities defined, and a culture that encourages raising AI-related concerns.)

- ☑ Did we clearly define the context and scope of this AI system? (Purpose, intended use, stakeholders, and potential societal impact – the “Map” groundwork.)

- ☑ What are the top risks of this AI system and who/what could be harmed? (List out potential failure modes or misuse scenarios identified during risk mapping.)

- ☑ Do we have metrics or tests to monitor those risks? (If bias is a risk, how are we measuring bias? If security is a risk, are we testing for vulnerabilities? Set up the Measure mechanisms.)

- ☑ What thresholds or criteria will trigger a response? (E.g., “alert the team if accuracy drops below 90%” or “if more than 5% of outputs are flagged as problematic, pause deployment”. Define what unacceptable performance looks like.)

- ☑ What risk mitigations have we implemented? (Document the safeguards in place: model adjustments, human oversight, process changes, etc. – the Manage actions. Are they proportional to the level of risk?)

- ☑ Is there an incident response plan for AI failures? (Ensure you have a playbook if the AI goes awry – who to notify, how to pull the plug or fix the issue, and how to learn from it.)

- ☑ Are we documenting decisions and outcomes? (Maintain records of risk assessments, model changes, and incidents. This helps with accountability and improvement over time.)

- ☑ Are all relevant stakeholders involved and informed? (Cross-functional input in development, and transparency with users or those impacted by the AI. No one should be caught off guard by the AI’s behavior or our policies around it.)

- ☑ How will we keep this up to date? (Set a reminder to periodically review this system’s risks and controls. As the AI or its environment changes, loop back into Map–Measure–Manage again.)

This checklist isn’t exhaustive, but if you can comfortably check off each of these items, you’re in pretty good shape when it comes to managing your AI system’s risks! It means you’ve thought through governance, risk identification, measurement, mitigation, and continuous improvement – which are the essence of the NIST AI RMF in practice.
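If you want to track the checklist per system rather than on paper, a few lines of Python are enough. This is only a sketch: the item labels are shortened paraphrases of the questions above, and the `open_items` helper is a hypothetical name, not part of any NIST tooling.

```python
# Shortened labels for the ten checklist questions above.
CHECKLIST = (
    "governance established",
    "context and scope defined",
    "top risks identified",
    "metrics or tests in place",
    "response thresholds defined",
    "mitigations implemented",
    "incident response plan ready",
    "decisions documented",
    "stakeholders involved",
    "periodic review scheduled",
)


def open_items(answers):
    """Checklist items that are unanswered or answered 'no', in list order."""
    return [item for item in CHECKLIST if not answers.get(item, False)]


# Example: everything done except two items (one 'no', one never answered).
answers = {item: True for item in CHECKLIST}
answers["incident response plan ready"] = False
del answers["periodic review scheduled"]

remaining = open_items(answers)
print("Still open:", remaining)
```

Treating a missing answer the same as a "no" is a deliberate choice here, since an item nobody has looked at should not count as covered.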

Conclusion

The NIST AI Risk Management Framework (AI RMF 1.0) represents a significant milestone in our collective journey toward trustworthy and responsible AI. Together, the four functions – Govern, Map, Measure, Manage – provide a holistic, structured approach for organizations to identify and tackle AI risks throughout the lifecycle of a system[1]. Rather than viewing AI risk as an abstract or overwhelming problem, the framework breaks it down into manageable pieces and concrete actions. For practitioners, it’s a relief to have this playbook: it takes high-level principles (like fairness, transparency, safety) and translates them into processes that engineers, risk officers, and decision-makers can actually work with on a daily basis.

Implementing the AI RMF isn’t without effort – as we discussed, it requires commitment and resources. But the payoff is worth it. By proactively managing risks, you’re aiming for AI systems that are reliable, fair, and safe, thereby avoiding unpleasant surprises, protecting users, and likely keeping regulators happy too[9]. In a very real sense, doing AI risk management early can save you from disasters (both in terms of failures and public trust) later on. There’s a saying in security that “the absence of incidents is a sign of success” – the same could be said for AI risk management: if your AI doesn’t cause headlines for the wrong reasons, that’s a win.

Looking ahead, we can expect the AI RMF to evolve as AI technology and society’s expectations evolve[7]. NIST and the community are actively working on updates (they’ve even begun releasing profiles for specific areas like generative AI). But no matter how it changes, the core message is likely to endure: managing AI risk is a continuous journey, and it’s a shared responsibility among all stakeholders. By adopting frameworks like the NIST AI RMF, we’re not trying to put the brakes on AI innovation – we’re building the guardrails that will help AI innovation stay on track in benefiting humanity. In the end, the goal is AI we can trust: systems that are not only intelligent, but also aligned with our values and robust against the risks we know (and even those we have yet to discover).

If you missed Part 1 of this series, which covers the introduction to the AI RMF and the Govern and Map functions, you can read it here.

References

[1] NIST AI Risk Management Framework (AI RMF) v1.0 (NIST AI 100-1, Jan 2023). Available at https://nvlpubs.nist.gov/nistpubs/ai/nist.ai.100-1.pdf

[2] AuditBoard. “Safeguard the Future of AI: The Core Functions of the NIST AI RMF.” (blog). Available at https://auditboard.com/blog/nist-ai-rmf

[3] StandardFusion Blog. “What is the NIST AI Risk Management Framework (AI RMF)?” (Apr 2025). Available at https://www.standardfusion.com/blog/what-is-the-nist-ai-risk-management-framework-ai-rmf

[4] Lumenova Blog. “AI Accountability: Stakeholders in Responsible AI Practices.” (Sep 2024). Available at https://www.lumenova.ai/blog/responsible-ai-accountability-stakeholder-engagement/

[5] NIST AI RMF Core (via AIRC). “Govern 1.5: Ongoing monitoring and periodic review…” Available at https://airc.nist.gov/airmf-resources/airmf/5-sec-core/

[6] Securiti.ai. “Navigating the Challenges in NIST AI RMF.” Available at https://securiti.ai/challenges-in-nist-ai-rmf/

[7] Cleary Gottlieb Publications. “NIST’s New Generative AI Profile: 200+ Ways to Manage the Risks of Generative AI.” (Aug 2024). Available at https://www.clearygottlieb.com/news-and-insights/publication-listing/nists-new-generative-ai-profile-200-ways-to-manage-the-risks-of-generative-ai

[8] VentureBeat. “NIST releases new AI risk management framework for ‘trustworthy’ AI.” (Jan 2023). Available at https://venturebeat.com/2023/01/25/nist-ai-risk-management-framework-trustworthy-ai

[9] Diligent Insights. “NIST AI Risk Management Framework: A simple guide to smarter AI governance.” (Jul 2025). Available at https://www.diligent.com/resources/blog/nist-ai-risk-management-framework